What Separates Large-Scale Web Scraping From Traditional Scraping Methods?

Large-scale web scraping is pivotal for businesses as it automates data collection, tracks market trends, and powers machine-learning models. However, its scope extends beyond mere data extraction and trend analysis. Large-scale web scraping demands meticulous planning, skilled personnel, and robust infrastructure.

This article delves into the nuances of this process, highlighting its distinctions from conventional methods and providing insights into building, executing, and sustaining scrapers at scale. It also tackles vital challenges inherent to large-scale web scraping operations.

Scraping product data is central to web scraping's business value, giving companies actionable insights for competitive analysis and strategic decision-making. By applying large-scale extraction to domains such as eCommerce, food, grocery, and travel data scraping, companies can streamline data-driven processes, optimize market strategies, and maintain a competitive edge in dynamic industries. The discussion also covers best practices for handling large data volumes, navigating legal considerations, and ensuring scalability and reliability in web data collection.

Comparing Large-Scale Web Scraping with Regular Web Scraping

Large-scale and regular web scraping differ significantly in approach, scope, and operational requirements, serving distinct needs and challenges in data extraction and analysis:

Scope and Volume:

- Regular Web Scraping: Typically involves extracting data from a limited number of websites or specific web pages. It focuses on collecting manageable datasets, often related to niche research or monitoring.

- Large-Scale Web Scraping: Involves collecting extensive data across numerous sources or entire websites, with the aim of building comprehensive datasets large enough to support robust analysis and insights.

Infrastructure Requirements:

- Regular Web Scraping: Generally executed using lightweight tools and frameworks such as BeautifulSoup or Scrapy, running on local machines or small-scale servers. It requires minimal computational resources and infrastructure setup.

- Large-Scale Web Scraping: Demands sophisticated infrastructure capable of handling massive data volumes and complex operations. This includes distributed computing frameworks (e.g., Apache Spark), cloud services for scalability (e.g., AWS, Google Cloud), and efficient data storage solutions (e.g., databases and data lakes). The infrastructure must support parallel processing, fault tolerance, and high availability to manage the scale and variability of scraped data.

Automation and Maintenance:

- Regular Web Scraping: Often manually initiated or scheduled for periodic updates by small teams or individual users. Maintenance involves ensuring scripts remain functional and adapting to minor website structure changes.

- Large-Scale Web Scraping: Requires automated processes to handle continuous data extraction and frequent updates across multiple sources. It involves building robust pipelines for data ingestion, transformation, and storage. Proactive maintenance is essential to address challenges such as IP blocking, changes in website structure, and ensuring data consistency and integrity over time.

Legal and Ethical Considerations:

- Regular Web Scraping: Because the scope and impact of extraction are limited, complying with website terms of service, robots.txt guidelines, and data protection rules such as the EU's General Data Protection Regulation (GDPR) is typically manageable.

- Large-Scale Web Scraping: Because of the volume of data collected and its potential impact, it faces heightened scrutiny and greater legal complexity. Compliance with data privacy laws, intellectual property rights, and ethical guidelines (e.g., respecting website terms and user consent) becomes crucial. This requires robust frameworks for monitoring and ensuring adherence to legal requirements across large-scale operations.

Business Applications:

- Regular Web Scraping: It primarily serves specific needs like market research, competitor analysis, or gathering insights for niche applications.

- Large-Scale Web Scraping: Essential for comprehensive market analysis, trend forecasting, machine learning model training, and supporting complex business decisions. It enables businesses to derive actionable insights from extensive datasets, enhancing strategic planning, operational efficiency, and competitive advantage.

Challenges:

- Regular Web Scraping: Faces fewer scalability, data storage, and processing speed challenges than large-scale operations, and can usually be managed with straightforward extraction and basic processing.

- Large-Scale Web Scraping: Deals with complexities such as handling diverse data formats, overcoming IP blocking and rate limits, managing proxy rotation, and maintaining data consistency across extensive datasets. Critical scalability considerations include processing speed, data storage costs, and the high availability of the scraping infrastructure.

In summary, while regular web scraping fulfills specific data collection needs on a smaller scale, large-scale web scraping is essential for enterprises aiming to harness vast datasets for strategic insights, competitive positioning, and operational excellence. It requires robust infrastructure, advanced automation, stringent compliance measures, and proactive maintenance to manage the complexities and scale of modern data-driven environments effectively.

When Is Large-Scale Web Scraping Required?

Large-scale web scraping is required when businesses need to:

- Extract Comprehensive Data: Gather extensive datasets from multiple sources or entire websites for in-depth analysis and insights.

- Support Machine Learning: Train robust machine learning models with large volumes of diverse data to enhance predictive capabilities.

- Monitor Market Dynamics: Track and analyze dynamic market trends, pricing fluctuations, and competitor strategies across a broad spectrum.

- Enable Strategic Decision-Making: Inform strategic decisions with comprehensive, real-time data on consumer behavior, product trends, and industry developments.

- Enhance Operational Efficiency: Optimize processes such as inventory management, pricing strategies, and marketing campaigns through data-driven insights.

- Scale Business Operations: Facilitate scalability by automating data extraction and processing to handle growing data volumes efficiently.

Large-scale web scraping becomes necessary when businesses require extensive, detailed data to drive informed decisions, enhance competitiveness, and capitalize on market opportunities effectively.

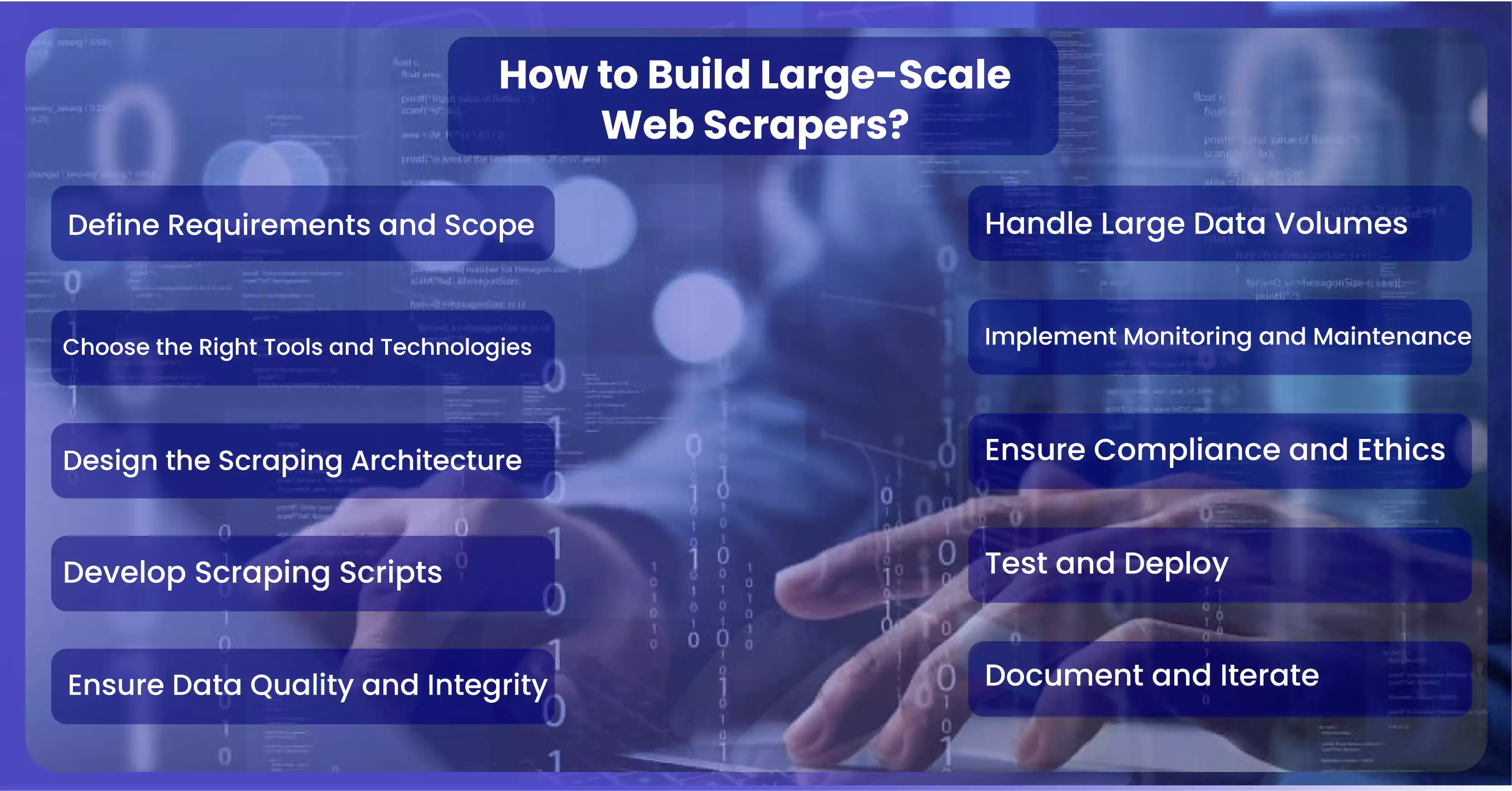

How to Build Large-Scale Web Scrapers?

Define Requirements and Scope:

- Identify the specific data sources, types of information needed, and the scale of data to be scraped.

- Determine the frequency of scraping and the required level of data granularity.

Choose the Right Tools and Technologies:

- Select programming languages (e.g., Python, Java) and frameworks or libraries (e.g., Scrapy, BeautifulSoup) suitable for handling large-scale data extraction.

- Consider using distributed computing frameworks (e.g., Apache Spark) and cloud services (e.g., AWS, Google Cloud) for scalability and efficient data processing.

Design the Scraping Architecture:

- Plan a scalable architecture that includes data extraction, transformation, and storage components.

- Implement parallel processing and distributed computing strategies to handle large volumes of data.
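Parallel processing can start on a single machine before moving to a distributed framework. The sketch below uses Python's standard `concurrent.futures` thread pool, which suits I/O-bound page downloads; it is not Apache Spark or a cluster setup. The function `fetch_all` and the injected `fetch` callable are hypothetical names, and in practice `fetch` would wrap whatever HTTP client the project already uses.

```python
from concurrent.futures import ThreadPoolExecutor, as_completed
from typing import Callable, Dict, Iterable, Tuple


def fetch_all(
    urls: Iterable[str],
    fetch: Callable[[str], str],  # hypothetical: downloads one URL, returns the body
    max_workers: int = 8,
) -> Tuple[Dict[str, str], Dict[str, Exception]]:
    """Fetch URLs concurrently; return (bodies by URL, errors by URL)."""
    results: Dict[str, str] = {}
    errors: Dict[str, Exception] = {}
    with ThreadPoolExecutor(max_workers=max_workers) as pool:
        # One task per URL; the pool caps how many run at the same time.
        futures = {pool.submit(fetch, url): url for url in urls}
        for future in as_completed(futures):
            url = futures[future]
            try:
                results[url] = future.result()
            except Exception as exc:  # one failed page should not stop the batch
                errors[url] = exc
    return results, errors
```

Collecting failures separately, instead of aborting the batch, lets a later retry pass pick up only the URLs that failed.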

Develop Scraping Scripts:

- Write robust scraping scripts using chosen frameworks and libraries to extract data from target websites.

- Implement error handling mechanisms to manage issues like website structure changes, network errors, and IP blocking.

Ensure Data Quality and Integrity:

- Validate scraped data to ensure accuracy, completeness, and consistency.

- Implement checks and validations during data extraction and processing to maintain data integrity.
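Validation checks are easiest to maintain as small functions that return a list of problems per record. This is a minimal sketch assuming a hypothetical product schema with `url`, `name`, and `price` fields; real projects often use a schema library instead, but the idea is the same.

```python
from typing import Any, Dict, List

REQUIRED_FIELDS = ("url", "name", "price")  # hypothetical schema for illustration


def validate_record(record: Dict[str, Any]) -> List[str]:
    """Return a list of problems; an empty list means the record passed."""
    problems = []
    # Completeness: every required field must be present and non-empty.
    for field in REQUIRED_FIELDS:
        if record.get(field) in (None, ""):
            problems.append(f"missing {field}")
    # Accuracy: price must parse as a non-negative number.
    price = record.get("price")
    if price not in (None, ""):
        try:
            if float(price) < 0:
                problems.append("negative price")
        except (TypeError, ValueError):
            problems.append("price is not numeric")
    # Consistency: stored URLs should be absolute, not relative paths.
    url = record.get("url")
    if url and not str(url).startswith(("http://", "https://")):
        problems.append("url is not absolute")
    return problems
```

Records that fail can be routed to a quarantine table for review rather than silently dropped.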

Handle Large Data Volumes:

- Optimize data storage solutions (e.g., databases, data lakes) to handle large volumes of scraped data.

- Use efficient data storage formats and indexing techniques for quick access and retrieval.
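As a small illustration of indexing and de-duplication, the sketch below uses Python's built-in `sqlite3` as a stand-in for a production database. The `products` table and its columns are hypothetical. The upsert (`ON CONFLICT ... DO UPDATE`) requires SQLite 3.24 or later, and it keeps one row per URL so repeated scrapes update data instead of duplicating it.

```python
import sqlite3
from typing import Dict, Iterable


def store_products(conn: sqlite3.Connection, rows: Iterable[Dict]) -> None:
    """Insert or update scraped product rows, keyed by URL."""
    conn.execute(
        """CREATE TABLE IF NOT EXISTS products (
               url TEXT PRIMARY KEY,
               name TEXT NOT NULL,
               price REAL,
               scraped_at TEXT NOT NULL
           )"""
    )
    # Secondary index so lookups by name avoid a full table scan.
    conn.execute("CREATE INDEX IF NOT EXISTS idx_products_name ON products(name)")
    # Upsert: a re-scraped URL overwrites its previous values.
    conn.executemany(
        """INSERT INTO products (url, name, price, scraped_at)
           VALUES (:url, :name, :price, :scraped_at)
           ON CONFLICT(url) DO UPDATE SET
               name = excluded.name,
               price = excluded.price,
               scraped_at = excluded.scraped_at""",
        rows,
    )
    conn.commit()
```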

Implement Monitoring and Maintenance:

- Set up monitoring tools to track performance, detect failures, and manage resources effectively.

- Establish automated alerts and notifications for issues like scraping failures or data anomalies.

- Regularly update scraping scripts to adapt to changes in website structures and maintain functionality.
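Automated alerts usually boil down to comparing each run's statistics against thresholds. This sketch assumes hypothetical thresholds (a 5% failure rate, and a record count below half of a baseline) and only produces alert messages; how they are delivered (email, chat, paging) is left to the team's existing tooling.

```python
from dataclasses import dataclass
from typing import List


@dataclass
class RunStats:
    requests: int  # HTTP requests attempted in the run
    failures: int  # requests that ultimately failed
    records: int   # records successfully extracted


def check_run(stats: RunStats, baseline_records: int,
              max_failure_rate: float = 0.05,
              min_record_ratio: float = 0.5) -> List[str]:
    """Return alert messages for a finished run; empty means healthy."""
    alerts = []
    # Scraping failures: too many requests failed.
    if stats.requests:
        rate = stats.failures / stats.requests
        if rate > max_failure_rate:
            alerts.append(f"failure rate {rate:.1%} exceeds {max_failure_rate:.1%}")
    # Data anomaly: far fewer records than a typical run.
    if baseline_records and stats.records < min_record_ratio * baseline_records:
        alerts.append(f"only {stats.records} records vs baseline {baseline_records}")
    return alerts
```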

Ensure Compliance and Ethics:

- Adhere to legal requirements and ethical standards related to web scraping, including respecting website terms of service and data privacy laws.

- Implement measures to avoid overloading target websites and respect rate limits to prevent IP blocking.
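One concrete, automatable piece of compliance is honoring robots.txt. Python's standard library ships `urllib.robotparser` for this. The sketch parses an inline, made-up robots.txt so it runs offline; in production you would point the parser at the site with `set_url()` and call `read()`. The user-agent string is hypothetical, and robots.txt compliance does not replace reviewing a site's terms of service.

```python
from urllib.robotparser import RobotFileParser

# Made-up robots.txt content for illustration only.
ROBOTS_TXT = """\
User-agent: *
Disallow: /checkout/
Crawl-delay: 2
"""


def build_parser(robots_txt: str) -> RobotFileParser:
    parser = RobotFileParser()
    parser.parse(robots_txt.splitlines())
    return parser


parser = build_parser(ROBOTS_TXT)
agent = "ExampleScraper/1.0"  # hypothetical user agent

print(parser.can_fetch(agent, "https://example.com/products/42"))  # True
print(parser.can_fetch(agent, "https://example.com/checkout/cart"))  # False
print(parser.crawl_delay(agent))  # 2
```

The `Crawl-delay` value, when a site provides one, is a natural input for the delay between requests.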

Test and Deploy:

- Conduct thorough testing of the scraper in staging environments to ensure functionality, performance, and data quality.

- Deploy the scraper to production environments gradually, monitoring its performance and making adjustments as necessary.

Document and Iterate:

- Document the scraper's architecture, processes, and configurations for future reference and maintenance.

- Iterate continuously based on feedback, changes in data requirements, and technology improvements.

Building a large-scale web scraping tool requires expertise in web technologies, data handling, and software engineering practices to extract, process, and utilize vast amounts of data for business insights and decision-making.

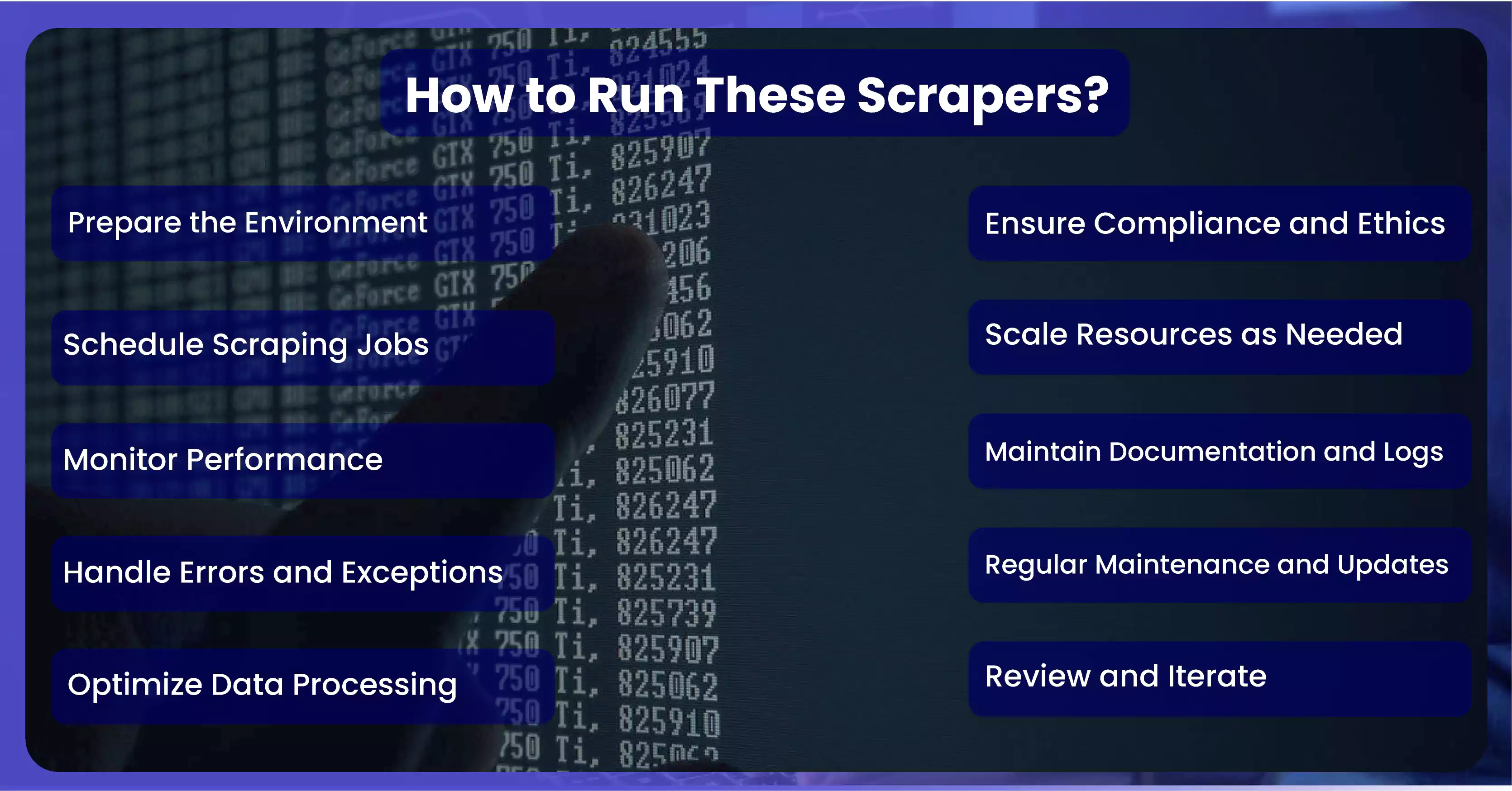

How to Run These Scrapers?

Running large-scale scrapers involves managing complex processes to collect and process vast amounts of data from multiple sources efficiently. Here's a step-by-step guide to running large-scale web scrapers effectively:

Prepare the Environment:

- Ensure that the infrastructure and resources (servers, cloud services) needed to run the scrapers are set up and configured for scalability.

- Verify that all necessary software dependencies and libraries are installed and up to date.

Schedule Scraping Jobs:

- Set up a scheduling system (e.g., cron jobs, task schedulers) to automate the execution of scraping tasks at regular intervals.

- Define the frequency of scraping jobs based on data update requirements and website policies (e.g., respecting rate limits).
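Cron works well for fixed schedules, but when each source needs its own frequency, a small "which jobs are due?" check is a common pattern inside a long-running worker. This is a minimal sketch; the `Job` type and the example intervals are hypothetical, and a production setup would persist `last_run` rather than keep it in memory.

```python
from dataclasses import dataclass
from datetime import datetime, timedelta
from typing import List, Optional


@dataclass
class Job:
    name: str
    interval: timedelta                  # how often this source should be scraped
    last_run: Optional[datetime] = None  # None means it has never run


def due_jobs(jobs: List[Job], now: datetime) -> List[Job]:
    """Return jobs that have never run or whose interval has elapsed."""
    return [j for j in jobs if j.last_run is None or now - j.last_run >= j.interval]
```

A fast-moving source such as prices might use an hourly interval, while a slow-changing catalog could run daily, which also keeps request volume proportional to how often the data actually changes.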

Monitor Performance:

- Implement monitoring tools to track scraper performance, including data extraction rates, processing times, and resource utilization (CPU, memory, network).

- Set up alerts and notifications for critical issues such as scraping failures, network errors, or exceeding rate limits.

Handle Errors and Exceptions:

- Develop robust error-handling mechanisms within the scraper to manage and recover from common issues like website structure changes, timeouts, or IP blocking.

- Implement retry mechanisms with back-off strategies to handle transient errors and ensure data continuity.
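A retry with exponential back-off doubles the wait after each failed attempt, and adding random "jitter" keeps many workers from retrying in lockstep. The sketch below is a generic, standard-library version; the default retry count, delays, and the choice of `ConnectionError` and `TimeoutError` as "transient" errors are assumptions to adjust per client library. `sleep` is injectable so the logic can be tested without waiting.

```python
import random
import time
from typing import Callable, Tuple, Type, TypeVar

T = TypeVar("T")


def retry_with_backoff(
    func: Callable[[], T],
    retries: int = 4,
    base_delay: float = 1.0,
    max_delay: float = 30.0,
    retry_on: Tuple[Type[BaseException], ...] = (ConnectionError, TimeoutError),
    sleep: Callable[[float], None] = time.sleep,
) -> T:
    """Call func, retrying transient errors with exponential back-off and full jitter."""
    if retries < 0:
        raise ValueError("retries must be >= 0")
    for attempt in range(retries + 1):
        try:
            return func()
        except retry_on:
            if attempt == retries:
                raise  # out of attempts: surface the last error
            # Delay ceiling doubles each attempt (1s, 2s, 4s, ...) up to max_delay;
            # sleeping a random fraction of it ("full jitter") spreads retries out.
            delay = min(max_delay, base_delay * 2 ** attempt)
            sleep(random.uniform(0, delay))
```

Errors outside `retry_on`, such as a parsing failure caused by a layout change, propagate immediately, since retrying them would not help.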

Optimize Data Processing:

- Utilize parallel processing techniques and distributed computing frameworks (e.g., Apache Spark) to efficiently handle large volumes of scraped data.

- Optimize data storage and retrieval mechanisms (e.g., indexing, caching) to improve performance and responsiveness.

Ensure Compliance and Ethics:

- While the scrapers are running, continuously monitor and adhere to legal requirements, website terms of service, and data privacy regulations.

- Implement measures to respect rate limits, avoid overloading target websites, and maintain ethical scraping practices.

Scale Resources as Needed:

- Monitor resource usage and scale up or down resources (e.g., servers, cloud instances) based on workload demands and performance metrics.

- Implement auto-scaling capabilities in cloud environments to dynamically adjust resources in response to varying scraping loads.
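Cloud auto-scaling services have their own policy configuration, but the underlying decision is often a simple sizing rule. This is a hypothetical queue-based rule: it estimates how many workers are needed to drain the pending URL queue within a target time, clamped to minimum and maximum limits. The per-worker throughput figure is an assumption you would measure from your own monitoring.

```python
import math


def desired_workers(queue_depth: int, pages_per_worker_per_min: float,
                    target_minutes: float, min_workers: int = 1,
                    max_workers: int = 50) -> int:
    """Workers needed to drain queue_depth pages within target_minutes, clamped."""
    if queue_depth <= 0:
        return min_workers
    capacity_per_worker = pages_per_worker_per_min * target_minutes
    needed = math.ceil(queue_depth / capacity_per_worker)
    return max(min_workers, min(max_workers, needed))
```

The `max_workers` cap matters for politeness as well as cost: scaling up workers also scales up load on target sites.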

Maintain Documentation and Logs:

- Keep detailed documentation of scraping jobs, configurations, and operational procedures for reference and troubleshooting.

- Maintain logs of scraping activities, errors encountered, and actions taken to ensure accountability and facilitate post-mortem analysis.
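Logs are far easier to search during a post-mortem when each line is structured. This sketch uses Python's standard `logging` module with a custom formatter that emits one JSON object per line; the `job`, `url`, and `status` context fields are hypothetical names passed through `extra=`.

```python
import json
import logging


class JsonFormatter(logging.Formatter):
    """Render each log record as a single JSON object."""

    def format(self, record: logging.LogRecord) -> str:
        payload = {
            "time": self.formatTime(record),
            "level": record.levelname,
            "message": record.getMessage(),
        }
        # Copy scraper-specific context supplied via `extra=` when present.
        for key in ("job", "url", "status"):
            if hasattr(record, key):
                payload[key] = getattr(record, key)
        if record.exc_info:
            payload["error"] = self.formatException(record.exc_info)
        return json.dumps(payload)


logger = logging.getLogger("scraper")
handler = logging.StreamHandler()
handler.setFormatter(JsonFormatter())
logger.addHandler(handler)
logger.setLevel(logging.INFO)

logger.info("page fetched",
            extra={"job": "prices", "url": "https://example.com/p/1", "status": 200})
```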

Regular Maintenance and Updates:

- Schedule regular maintenance tasks to update scraping scripts, libraries, and dependencies to adapt to changes in website structures or technology advancements.

- Conduct periodic reviews and optimizations of scraping processes to improve efficiency, reliability, and compliance.

Review and Iterate:

- Continuously monitor scraper effectiveness and performance through analytics and feedback loops.

- Incorporate feedback from stakeholders and users to improve functionality, features, and data quality.

Running large-scale scrapers requires careful planning, proactive monitoring, and adherence to best practices to ensure consistent and reliable data extraction, processing, and utilization for business insights and decision-making.

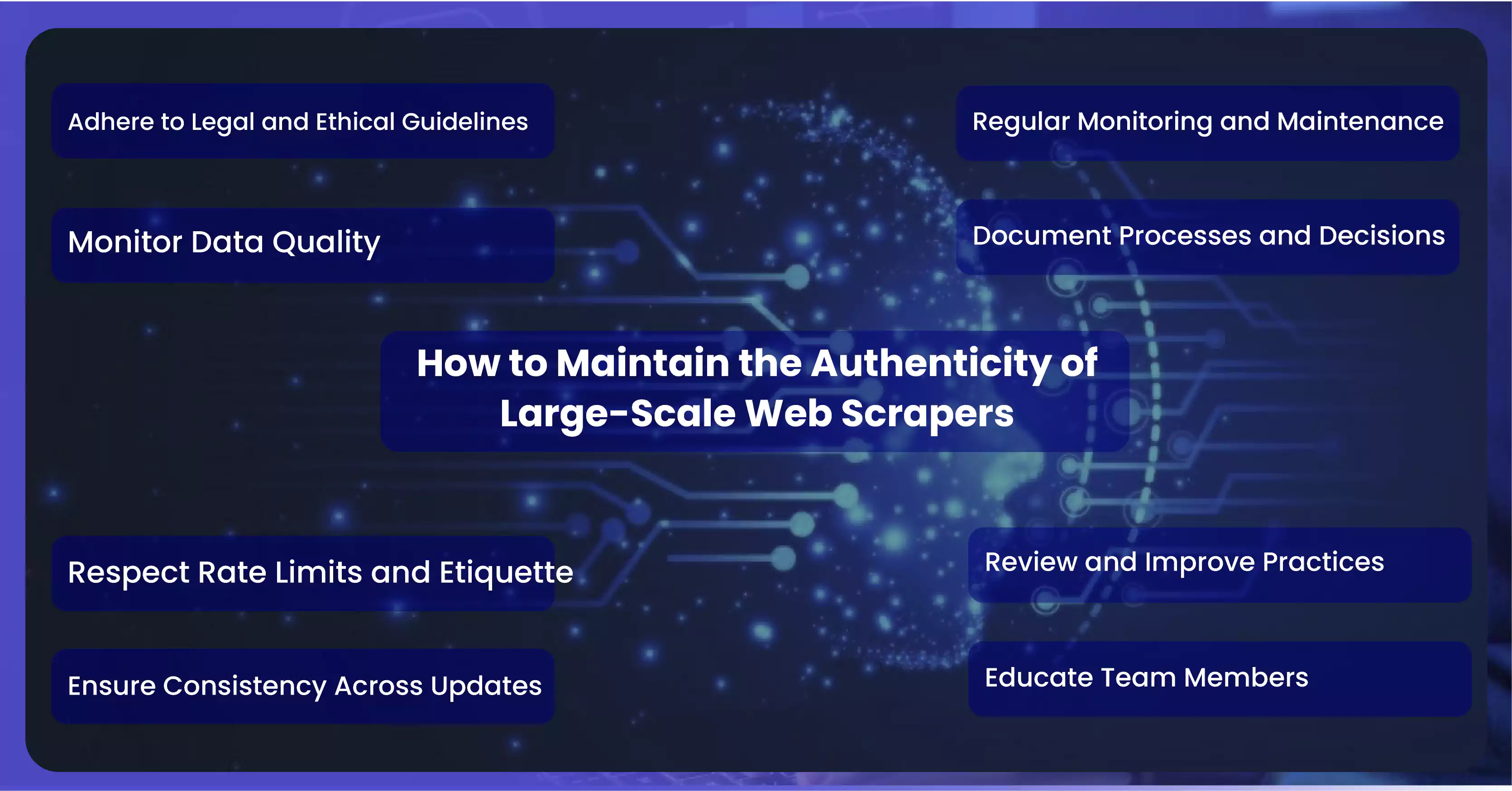

How to Maintain the Authenticity of Large-Scale Web Scrapers

Maintaining the authenticity of large-scale web scrapers involves ensuring that the scraped data is accurate, reliable, and obtained ethically. Here's how to maintain authenticity:

Adhere to Legal and Ethical Guidelines:

- Respect Website Terms: Follow target websites' terms of service and robots.txt guidelines. Avoid scraping prohibited content or violating access restrictions.

- Comply with Data Privacy Laws: When handling personal or sensitive information, ensure compliance with data protection regulations (e.g., GDPR, CCPA).

Data Quality:

- Validate Data Integrity: Implement validation checks during data extraction and processing to verify accuracy and completeness.

- Handle Errors: Develop error-handling mechanisms to effectively manage exceptions, network failures, and data inconsistencies.

Respect Rate Limits and Etiquette:

- Implement Rate Limiting: Adhere to website-specific rate limits to avoid overloading servers and getting IP blocked. Use delay strategies between requests.

- Rotate IPs and User Agents: Rotate IP addresses and simulate different user agents to mimic human browsing behavior and avoid detection.
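Rate limiting is usually applied per host, so a slow site does not throttle requests to other sites. The sketch below enforces a minimum interval between requests to the same domain; the 2-second interval in the usage note is an assumption, and real limits should come from each site's policies (for example its `Crawl-delay`). The clock and sleep functions are injectable for testing, and this version is not thread-safe; concurrent workers would need a lock around `wait()`.

```python
import time
from typing import Callable, Dict
from urllib.parse import urlsplit


class DomainRateLimiter:
    """Enforce a minimum delay between consecutive requests to the same host."""

    def __init__(self, min_interval: float,
                 clock: Callable[[], float] = time.monotonic,
                 sleep: Callable[[float], None] = time.sleep):
        self.min_interval = min_interval
        self.clock = clock
        self.sleep = sleep
        self._last: Dict[str, float] = {}  # host -> time of last request

    def wait(self, url: str) -> None:
        """Block until a request to this URL's host is allowed, then record it."""
        host = urlsplit(url).netloc
        now = self.clock()
        last = self._last.get(host)
        if last is not None:
            remaining = self.min_interval - (now - last)
            if remaining > 0:
                self.sleep(remaining)
                now += remaining
        self._last[host] = now
```

Usage: create `DomainRateLimiter(2.0)` once and call `limiter.wait(url)` immediately before each request.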

Ensure Consistency Across Updates:

- Handle Website Changes: Monitor and adapt to changes in website structures or content layouts to maintain scraping functionality.

- Version Control: Use versioning for scraping scripts and configurations to track changes and ensure consistency in data extraction methods.
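Website changes often break scrapers silently: selectors stop matching and fields come back empty instead of raising errors. A simple guard is to track how often each field is populated per run and flag fields whose fill rate drops sharply. This sketch assumes a hypothetical 90% threshold; a sudden drop like the one in the usage check below is a typical sign of a redesign that needs a script update.

```python
from typing import Dict, Iterable, List


def field_fill_rates(records: Iterable[Dict], fields: Iterable[str]) -> Dict[str, float]:
    """Fraction of records in which each field is present and non-empty."""
    records = list(records)
    fields = list(fields)
    if not records:
        return {f: 0.0 for f in fields}
    return {
        f: sum(1 for r in records if r.get(f) not in (None, "")) / len(records)
        for f in fields
    }


def detect_layout_change(records: Iterable[Dict], fields: Iterable[str],
                         min_fill_rate: float = 0.9) -> List[str]:
    """Return fields whose fill rate fell below min_fill_rate."""
    rates = field_fill_rates(records, fields)
    return [f for f, rate in rates.items() if rate < min_fill_rate]
```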

Regular Monitoring and Maintenance:

- Monitor Performance: Use monitoring tools to track performance metrics like response times, throughput, and error rates.

- Scheduled Checks: Conduct regular audits and checks to verify data accuracy and identify anomalies or discrepancies.

Document Processes and Decisions:

- Document Scraping Methods: Maintain detailed documentation of scraping methods, parameters, and configurations used for data extraction.

- Record Changes: Document changes to scraping scripts, including updates to adapt to website modifications or improve efficiency.

Review and Improve Practices:

- Continuous Improvement: Review scraping practices periodically to incorporate best practices, optimize performance, and enhance data quality.

- Feedback Mechanisms: Seek feedback from stakeholders and users to identify areas for improvement and address concerns about data authenticity.

Educate Team Members:

- Training and Awareness: Train team members in scraping operations on ethical practices, legal considerations, and data privacy requirements.

- Code of Conduct: Establish a code of conduct for scraping activities to ensure ethical behavior and compliance with organizational policies.

By implementing these practices, businesses can maintain the authenticity of their large-scale web scrapers, ensuring that the data obtained is reliable, legally obtained, and aligned with ethical standards. Large-scale web scraping services that follow these practices foster trust in the data-driven insights derived from scraping, supporting informed decision-making and strategic planning.

Conclusion: Large-scale web scraping is critical for businesses harnessing vast data for strategic insights and operational efficiencies. Building robust tools involves meticulous planning, leveraging scalable infrastructure, and implementing advanced automation. Running bots requires careful monitoring of performance, adherence to legal and ethical standards, and proactive handling of data quality and website changes. Maintenance involves continuous optimization, documentation of processes, and adherence to best practices to ensure the authenticity and reliability of scraped data. Mastering large-scale web scraping ultimately empowers organizations to stay agile, competitive, and informed in an increasingly data-driven landscape.

Discover the unparalleled web scraping and mobile app data scraping services offered by iWeb Data Scraping. Our expert team specializes in diverse data sets, including retail store location data scraping and more. Reach out to us today to explore how we can tailor our services to your project requirements, ensuring optimal efficiency and reliability for your data needs.